Langton's Ant is the first bot programming I tried on CodinGame. I did so because I wanted to learn Monte Carlo Tree Search (MCTS) and this bot programming was tagged with MCTS. Since then, I have improved my understanding of MCTS and practiced a lot of bot programming on CodinGame. Let us see if I can improve my ranking on Langton's Ant!

Please note that the code I share in this article is not what I would call production-ready code. On the CodinGame platform, you cannot activate compiler optimizations. Thus, any use of the STL is prohibitive as it is really slow when used without compiler optimizations. Also, you have to get rid of any unnecessary abstraction and write low-level code. Still, I believe that the shared code can constitute a nice basis to write bots for board games on CodinGame.

1. Initial Situation - a Black Box Ranked #8

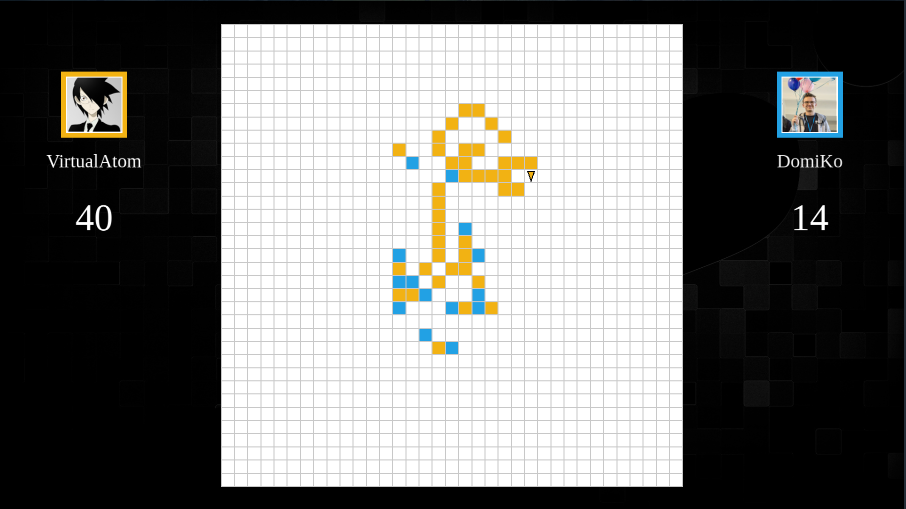

I opened the leaderboard of Langton's Ant and noticed that my bot is currently ranked #8 (see table below). I was excited at first: since I reached such a ranking with limited knowledge of MCTS and bot programming, I was expecting to go much higher after 8 months of training 😎. However, once I read the names of players above me, I did not stay happy for long 😑. Those people are highly ranked CodinGamers in the global leaderboard: Redstrike is #38, MSmits is #28, EricSMSO is #10, _Royale is #2, DomiKo is #42, eulerscheZahl is #1, and ValGrowth is #22. It will not be easy to beat them.

| CodinGamer | TrueSkill | Submission Date | |

|---|---|---|---|

| 1 | Redstrike | 47.46 | 2019-06-16 |

| 2 | MSmits | 46.87 | 2020-03-15 |

| 3 | EricSMSO | 42.58 | 2019-06-04 |

| 4 | _Royale | 42.17 | 2020-02-26 |

| 5 | DomiKo | 41.11 | 2020-10-14 |

| 6 | eulerscheZahl | 41.08 | 2020-09-07 |

| 7 | ValGrowth | 39.22 | 2020-06-17 |

| 8 | VirtualAtom | 38.34 | 2020-05-05 |

| 9 | Apfeloxid | 38.29 | 2019-04-29 |

| 10 | dbdr | 37.44 | 2020-09-08 |

I sought comfort in looking at my bot's code. I thought I had missed easy optimizations or heuristics that would improve my bot a lot. This represented yet another disappointment. My code was an incomprehensible black box, it would be easier to restart from scratch than refactor the code to meet my current standard. The code can still be used as a reference to create an opponent in an arena.

2. Langton's Ant Rules

This bot programming is a two-player board game, played in two rounds.

The first player has the gold color, and the second player has the blue color.

The board is composed of N x N tiles where N is an odd number in [25, 35].

A round starts with the board completely white.

Players alternatively choose a white tile and color it with their color, until there are 2×T colored tiles (T gold tiles and T blue tiles),

for T in [15, 25].

Then a Langton's ant is placed in the middle of the board, facing upward, colored with the first player color.

The ant moves L times, for L in [100, 200], following these rules:

- if the ant is on a colored tile, it becomes of the tile's color, then the tile becomes white, the ant turns left and moves forward on the next tile

- if the ant is on a white tile, the tile becomes of the ant's color, then the ant turns right and moves forward on the next tile

Once the ant moved L times or exited the board, the round is over.

The score of each player is the number of tiles of his color.

Since this game favors the second player, another round is played where the gold player plays second and the blue player plays first.

The scores of the two rounds are added and the winner is the player with the highest score.

3. A Better Bot Version

MCTS is a nice algorithm for board games. I would not fully describe it, as I doubt I could do it properly. If you are not familiar with this algorithm yet, I invite you to start with the Wikipedia page. Also, make sure to read the article Monte-Carlo Tree Search Solver as it is a generally good improvement.

I went for a generic MCTS implementation with two improvements.

The first one is MCTS Solver, as I expected it could improve my decisions near the end of a round.

The second one is tree reuse: the search tree is kept in memory to maximize the exploitation of data obtained so far.

Here are my node and move structures:

namespace mcts {

struct Node {

static constexpr float unknown_outcome = -1000.f;

inline constexpr void init( uint32_t parent_index ) noexcept {

parent = parent_index;

left_child = 0;

visits = 0.f;

sum = 0.f;

outcome = unknown_outcome;

child_count = 0;

x = 0;

y = 0;

}

uint32_t parent;

uint32_t left_child;

float visits;

float sum; // from the parent perspective

float outcome; // from the parent perspective (unknown_outcome if not solved)

uint16_t child_count;

uint8_t x, y; // from the parent perspective

};

struct Move {

uint8_t x, y;

};

static constexpr uint32_t preallocated_node_count = 33000000;

Node nodes[ preallocated_node_count ];

uint32_t used_nodes = 0;

static constexpr uint32_t preallocated_move_count = 1225;

Move preallocated_moves[ preallocated_move_count ];

uint32_t used_moves = 0;

// Initialize MCTS global data

inline void initialize( uint8_t posing_turns, uint8_t ant_turns ) noexcept {

used_nodes = 1;

nodes[0].init( 0 );

}

}As always in my source code for CodinGame, objects are stored into global contiguous stack buffers. Since they are global, I do not have to pass them around in every function I write, which helps to reduce the parameter list and eases the development. Because it is contiguous, the performance will likely benefit from cache hits. Finally, because it is in the memory stack, I do not have to pay for the runtime overhead of memory allocation. The downsides are that I have to fit everything in the 768Mo memory limit of the platform and also that I have to manage memory myself.

The remaining of the MCTS implementation has the following organization:

namespace mcts {

Result search( const Params& params ) {

Result result;

while( nodes[ params.start_node ].outcome == Node::unknown_outcome && timer::elapsed() < params.time_limit ) {

// reset the search to current state

Board board{ params.state.board };

uint32_t node_index = params.start_node;

// selection

while( !board.complete() ) {

auto current = nodes[ node_index ];

if( current.child_count == 0 ) break;

NodeSelecter selecter{ current }; // customization point 1: how to select a path in the tree?

for( uint16_t i = 0; i < current.child_count && !selecter.finished(); ++i )

selecter.add( current.left_child + i );

node_index = selecter.best_node_index();

board.play( nodes[ node_index ].x, nodes[ node_index ].y );

}

// expansion

if( !board.complete() && nodes[ node_index ].child_count == 0 ) {

compute_moves( board ); // customization point 2: how to choose moves to consider?

for( uint32_t i = 0; i < used_moves; ++i ) {

auto& child = nodes[ used_nodes + i ];

child.init( node_index );

child.x = preallocated_moves[ i ].x;

child.y = preallocated_moves[ i ].y;

}

nodes[ node_index ].left_child = used_nodes;

nodes[ node_index ].child_count = used_moves;

used_nodes += used_moves;

node_index = nodes[ node_index ].left_child + fastrandom::choice( nodes[ node_index ].child_count );

board.play( nodes[ node_index ].x, nodes[ node_index ].y );

++result.expanded_nodes;

}

// evaluation by rollout

bool solved = board.complete() || nodes[ node_index ].outcome != Node::unknown_outcome;

float reward = evaluate_board( board ); // customization point 3: how to evaluate a board/perform a rollout?

if( solved ) nodes[ node_index ].outcome = convert_to_outcome( reward );

++result.rollouts;

// backpropagation + solver

while( true ) {

auto&node = nodes[ node_index ];

++node.visits;

node.sum += reward;

if( solved && node.child_count ) {

bool all_solved = true;

uint32_t best_index = 0;

for( uint32_t i = node.left_child, end = i + node.child_count; i < end; ++i ) {

if( nodes[ i ].outcome == Node::unknown_outcome )

all_solved = false;

else if( best_index == 0 || nodes[ i ].outcome > nodes[ best_index ].outcome )

best_index = i;

}

if( best_index && all_solved )

node.outcome = -nodes[ best_index ].outcome;

else solved = false;

}

if( node_index == params.start_node ) break;

node_index = node.parent;

reward = 1.f - reward;

}

}

// choose the best found node

auto& root_node = nodes[ params.start_node ];

BestNodeEvaluation evaluation; // customization point 4: which child to play?

for( uint32_t i = 0; i < root_node.child_count; ++i ) {

auto& child = nodes[ root_node.left_child + i ];

BestNodeEvaluation this_node_evaluation{ child };

if( this_node_evaluation > evaluation ) {

evaluation = this_node_evaluation;

result.best_child = root_node.left_child + i;

result.best_score = evaluation.score();

}

}

result.x = nodes[ result.best_child ].x;

result.y = nodes[ result.best_child ].y;

result.outcome = nodes[ result.best_child ].outcome;

return result;

}

You can notice 4 customization points that are not given in the previous code. The first one is the tree node selection during a step of the search. I used something standard for this, with Upper Confidence bounds applied to Trees (UCT) that balances exploration (getting new data) and exploitation (using acquired data). If a node has never been explored, the selecter will select it and immediately stop the selection. For the last customization point, I also used something standard. The evaluation picks:

- the solved node with the highest outcome if it exists

- otherwise the node with the highest number of visits

- or the node with the highest rewards sum if there are multiple nodes with the maximal number of visits

The second customization point which chooses which moves to consider is quite important in this game.

As N can be equal to 35, there are up to 1225 = 35×35 tiles.

For a board of maximal size, if we consider all possible moves, the first MCTS node has 1225 children, each child has 1224 children.

This represents almost 15 million nodes for only two colored tiles, while there can be up to 70 colored tiles.

We cannot consider all possible moves, we need a heuristic to choose only a subset of interesting moves.

I used here the same heuristic as my previous bot which is computation heavy but gave me good results in the past.

The third customization point is quite simple, I perform a rollout with the same move selection heuristic as in the expansion step.

Once there are 2×T colored tiles, the moves are simulated and the score is computed.

The reward is the score of the final board divided by the maximum positive score and is offsetted by 0.5.

This way the reward goes from 0.0 (large defeat) to 1.0 (large victory), 0.5 represents a draw and any reward close to 0.5 represents a small defeat or victory.

After some unit tests for the board and MCTS, I wrote an arena to test my different bot versions.

The previous version did not have MCTS solver and tree reuse but has an additional feature.

I was not sure this feature improved the overall quality of the bot, so I have made a version without this feature and let the two versions fight in the arena.

After 350 matches in the arena, the version with that feature had a win rate of 51.23 ± 2.7%.

So it did improve the win rate but not significantly to try to implement this feature in the new version.

Instead, I let the new version, let us call it v02, and the previous version, v01, fight in the arena.

After 100 matches, v02 had a win rate of 70.10 ± 4.5%.

I did not wait any longer and submitted v02 in the CodinGame's arena.

Below are the results of that submission.

| CodinGamer | TrueSkill | Win Rate | Submission Date | |

|---|---|---|---|---|

| 1 | Redstrike | 47.93 | 00% | 2019-06-16 |

| 2 | MSmits | 46.59 | 67% | 2020-03-15 |

| 3 | EricSMSO | 43.01 | 00% | 2019-06-04 |

| 4 | _Royale | 42.16 | 50% | 2020-02-26 |

| 5 | VirtualAtom | 41.73 | 2020-12-29 | |

| 6 | eulerscheZahl | 41.50 | 29% | 2020-09-07 |

| 7 | DomiKo | 41.25 | 75% | 2020-10-14 |

| 8 | ValGrowth | 39.06 | 83% | 2020-06-17 |

| 9 | Apfeloxid | 38.41 | 80% | 2019-04-29 |

| 10 | dbdr | 37.42 | 71% | 2020-09-08 |

The bot v02 was indeed better.

I had a look at cgstats to check my win rates and the number of matches against players.

During this submission, v02 was very weak against Redstrike with 10 defeats!

When I tried in the CodinGame IDE to fight against Redstrike (those matches do not count in the rankings), I won 4 out 5 times.

I think my bot is unstable and different submission matches could lead to a different ranking.

If I do another version, I will have to improve the stability.

Conclusion

In this article, I showed how to write an MCTS for Langton's Ant, a bot programming on CodinGame. With MCTS solver, tree reuse, and a heuristic to select which moves to consider, the bot finished at place #5.

I have always thought that the weak part of my bot is the move heuristic. I tried some other heuristics, but they lead to worse results. Opponents seem to use another heuristic since they play moves my bot did not consider (see table below). As a consequence, my bot has to reset the search tree, losing any collected information, to start the search in a new branch. Also, a performance profile showed me that my current heuristic represents 90.77% of the MCTS runtime. If I find a heuristic almost as good as the current one but less computation-heavy, I would be able to explore more game states and play better moves.

| CodinGamer | Expected Move |

|---|---|

| Redstrike | 73.7% |

| MSmits | 80.8% |

| EricSMSO | 85.1% |

| _Royale | 76.6% |

| eulerscheZahl | 90.8% |

Since you are now acquainted with MCTS, I recommend you trying the bot programming Ultimate Tic-Tac-Toe (UTTT) on CodinGame. You can achieve a high rank in UTTT with a simple MCTS, tuned for performance. To be promoted to the Legend league, you only need to improve your number of MCTS iterations per turn. Profile your bot, optimize it, and see you in Legend! 😎