Between October 4th and October 10th, CodinGame hosted an optimization challenge. I was busy working then, but I wanted to participate. Even though I lacked the time to do as much as I wanted, I was happy with my results and had a lot of fun. I'm sure I will continue to fiddle with my solution to this challenge! In this post, I'll describe my approach to this challenge, what helped me to get through it, and some recommendations.

1. Introduction

I was so excited to see an optimization challenge on CodinGame. Optimization is my favorite activity on the platform (see previous articles on Mars Lander and Golfing in brainf**k ^^). I love seeing immediately if a code change would increase my score, unlike multiplayer games/contests where the ranking instability makes it harder to guess if you are going in the right direction. I also enjoy fighting against myself or my code to get points rather than defeating other players' bots in matches.

It was the first optimization challenge I participated in. I knew I would need more tools or processes, but this challenge was the right occasion to acquire those. I focused on time efficiency: I wanted to get the best results for the number of hours I could spend on the challenge during that very busy period. Such efficiency is a common aspect of my work, so I'm always keen to practice it.

As usual, as soon as I discovered the contest details, I sketched a plan and followed it. It's a good habit to make decisions beforehand while I'm highly motivated, fully rested, and with the most brain power. Here are the main lines of my plan:

- First, write a dumb generic greedy algorithm that achieves 100% success on test cases, using a one-tube pod between an input node and its destination (see the next section where I define those terms).

- Then, improve this greedy algorithm with pods traveling on multiple tubes.

- The last step is to find manual solutions to some levels. Then, pick the hand-mand solution with the most significant improvement compared to the greedy algorithm and write a procedure implementing it. If it works, add a function to detect this level and call the procedure directly.

- Rule 1: Do not try to write a search algorithm that simulate pods or astronauts. The pathing algorithm, a CodinGame special ingredient, looks like a nightmare to implement. I won't have the brain power or the time to do it right, and I'm unlikely to write a search bot that would outperform my abovementioned approach.

- Rule 2: Fork the referee, tweak it, and make it do the work for me. I'm unfamiliar with Java, so I knew it would take some time to settle a development environment for it and then build and run a modified version of it. I was confident in the end it would be more than helpful.

- Rule 3: Add an optimizer for fetching possible tubes from a given node, but do not optimize other things. It is doubtful I'd ever need more runtime performance.

All I had to do during this contest was to commit to this plan, and I'm pleased with the results :-}.

2. The Game

The game involves transferring astronauts from landing pads to their destination lunar modules. To simplify, it is similar to transferring resources from input nodes to destinations in a network. Each non-input node has a type that determines which kind of resource it accepts. An input node can spawn resources of multiple types at the beginning of each turn. Whenever a resource reaches its destination, points are given depending on how long it took and how many resources already reached this node during a turn. There are 20 turns, and each of these turns lasts 20 days.

Tubes and teleporters connect the nodes of this network. A tube connects two nodes, and its cost is proportional to the distance between them. It is generally cheaper than teleporters but has a limited throughput. We can increase the throughput of a tube, but it quickly becomes costly. Resources circulate through tubes thanks to pods. A pod costs one-fifth of a teleporter, so it's a good habit to make it travel through multiple tubes to make them profitable. It takes one day for a pod to progress from one node to the next through a tube connecting the two. A node can have at most five tubes, two tubes cannot intersect, and a tube cannot cross a node; simple, no?

Teleporters are instantaneous but directional: it takes zero days for resources to go from the IN teleporter node to the OUT teleporter node, but resources cannot go through the teleport from the OUT node to the IN node. You cannot chain teleporters: once a node has a teleporter, either an IN or OUT gate, it cannot have another one. Still, when you must connect two sets/clusters of distant nodes, teleporters are very useful.

Pods were the most challenging part of the game for me. I did not bother to understand their behavior fully. A custom pathing system distributes resources between pods and selects where resources go. It uses shortest distances, resource identifier ordering, and other subtleties that made me write the rule #1 in my plan. I suspect CodinGame introduced this kind of behavior to make it less accessible to write a search bot and to promote heuristics that are more beginner-friendly. When search bots are easy to make, a challenge quickly becomes a code optimization process: the one that makes more iterations per turn wins. Anyone can write heuristics rapidly, which is more rewarding for beginners. The only thing about pods I considered was that I could destroy a pod to reclaim three-fourths of its creation price and reallocate this money elsewhere on the network.

Some nodes appear after the first turn, so an excellent optimized network could quickly become limited when those new nodes arrive. Another perturbation is that we do not know when or how much money we get per turn to extend/improve the network. A rule states that we get at least 10% of the funds remaining at the end of the previous turn. I also chose to ignore this rule.

3. Kick-starting

The first step was to parse the input and display information about the game state to understand better what was happening. That's not fun, but I only do it twice per game I'm working on. The first time is filling in the most straightforward state representation I could imagine. The second time is when I'm better aware of how I'll need to manipulate data, and I entirely rewrite my state representation. Thankfully, I have some reusable code in my CodinGame git repository to parse data in C++.

I thought about a simple algorithm to get me started with 100% completion.

I focused on the first input node of the state and picked the most spawned resource type for it.

My first bot iteratively searched for this type's closest, unconnected, connectable destination node from the input node.

The bot ended the turn when it found no more valid or affordable destination.

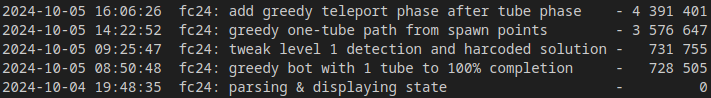

The submission score for such a greedy, myopic bot is 728 505 points.

Next, I fiddled with a manual solution for the first level to better understand pod creation/destruction and tube upgrade.

Then, I improved the bot to handle more than the first spawn node, always with a 1-tube pod, and reached 3 576 647 points.

I pushed a little more by adding a second phase in the bot, which searches for suitable teleporters connecting a spawn node to a destination node.

My score progressed to 4 391 401 points, not bad!

I quickly completed the first step of my plan. It did not take much time or effort, which was quite satisfying. My experience with graphs and having done Platinum Rift 2 and Ghost in the Cell games really helped.

4. Referee and Tooling

CodinGame has made it a habit to release the referee code on GitHub. You can then explore the code to understand the rules better, identify how points are computed, and ensure you know the range of possible values to be able to pack them in your state representation.

I forked that repository to address rule #2 (make the referee work for me) before dealing with the rest of the plan. I'm ashamed that I spent a lot of time setting up the dev environment to build the referee and its viewer and writing a Java program to test and validate my bots. I'm leaving here some hints in case you are also unfamiliar with these and want to do the same.

First, I created a class to execute my bot on the test sets and display the scores.

I adapted a typical pattern we saw in the previous contest referees' shared forks and read the CodinGame toolkit's code.

Part of my efforts are public in the CommandLineRunner.java file;

my remaining modifications are either too dirty to be published or too tied to my way of writing bots to be of use to others.

Next, I did my best to add that file to the build system (see the pom.xml file) and built the jar with a mvn package command in the repository root.

I tried to figure out why I could not use the viewer until I realized I needed to build the viewer before calling mvn.

This is done by executing tsc in the src/main/resources directory (the directory containing a package.json file).

Now I can build programs relying on the official referee and even tweak such referee, but why am I trying to do so?

In my Spring Challenge 2021 retrospective, I said that I borrowed an idea _Royale: make the referee validate my code.

People working with me know how much I like to let computers do part of my responsibilities, «If a computer can do it, I won't do it.»

I have assertion macros allowing me to verify the state and report failure.

When a failure happens, it breaks in the debugger or stops the bot immediately.

I format my report with a prefix (I use [DATA] , borrowed from Psyho if I recall correctly).

If the referee looks for this prefix in what my bot sends, the referee can alert me when something fails on a test case, at which turn, and on which level.

I add assertions to the state parsing code to compare with the result I expect from my commands.

I also add verifications in my algorithms, such as not trying to add a tube twice or creating a tube intersecting another, and so on.

Finally, I have a crash handler in my bot to report whenever a crash occurs, its reason and the call stack.

Thus, I only have to start my validation program on all levels to validate my bot.

All these assertions can be deactivated by a constexpr variable, which is a good thing to do when submitting the final bot.

With such tooling, I felt confident about rewriting my whole state representation and pursuing my plan.

5. Iterative Improvement Process

I knew I would need an optimizer to quickly return the valid nodes to connect a given node with a tube.

I decided to compute all the intersecting segments from the node positions.

There are at most 150 nodes, thus at most 11 175 segments.

I index the segment between A and B, with A > B, by I = A * (A - 1) / 2 + B.

For each segment I, I store a bitmask of all segments intersecting it intersecting[I].

Similarly, I store a buffer of bits allowed representing which segment is allowed, i.e., do not cross a node.

This data is updated whenever a new node is available and won't change during a turn.

I store the current tubes in a separate buffer tubes: this buffer can change frequently during a turn.

To check if a tube can be created between A and B, I compute its index I,

then check if this index corresponds to an allowed segment, and finally check tubes & intersecting[I].

There might be a better way to do it, but I never had to bother about performance or timeout during the contest.

At this point, we were past the weekend, and I had to code during my sleep time after work.

My brain power became a constraint I underestimated.

I managed to write a multi-tube greedy algorithm, but I still need to figure out how it works!

The bot is clearly limited but scored 5 052 457 points.

I knew it was possible to do much better but was unable to do so.

So I switched to the last step of my plan.

I wrote manual solutions for half of the levels.

For the levels where the handmade solution outperformed the greedy algorithm, I coded a specific algorithm to reproduce the approach of the handmade solution.

If I succeeded, I wrote a detection algorithm to identify the level from the inputs.

I did so for the first level (Example1), the third level (Balancing), the eighth level (Grid), and the twelfth (Distribution Network).

My score jumped to 5 972 041 points.

Based on the manual solutions I still had, I estimate it was possible to reach 6.4 million with this approach, even though the generic greedy algorithm was far from optimal.

I decided to stop there; my brain could no longer function, and I was exhausted!

Conclusion

I enjoyed this contest a lot. Relying on heuristics instead of trying to simulate all the game mechanisms was an excellent idea. This is the contest on which I spent the least time, though I reached my highest final rank: 40th out of 3970. Setting a dev environment to build and tweak the referee took time but was worth the effort; I'm sure it will be handy in my following CodinGame projects. Working on a plan before coding the first line again helped me to achieve my goals: I recommend anyone to try it at least once!

Congrats to each player, and thanks, CodinGame, for making an optimization challenge this year!